Patients and caregivers adapting to new equipment may use it incorrectly. But mistakes can be minimized by applying the principles of human factors and conducting usability studies early enough in a design project.

Paul Dvorak, Founding Editor

The idea of human-centric design is not new. It may have gotten its start in WWII, when aviation psychologists suggested a standardized instrument layout for all U.S. aircraft. Before that standardization, pilots moving from one aircraft to another had to relearn the location of instruments and controls, and those who did not adjust quickly often suffered fatal mishaps.

Today, applying the principles of human-centric design to a bewildering array of medical devices is one way to minimize errors in hospitals and homes.

“About a decade or so ago, the FDA began paying more attention to the increasing complexity of medical devices,” says Debbie McConnell, human factors lead at Battelle Laboratories. “In 2007, the FDA wrote guidance that said, in effect, if a company wanted a successful submission, it had to demonstrate its product was likely to be used as intended, and the medical device manufacturer could not use the justification that, ‘The user did not follow instructions.’”

In response, the Association for the Advancement of Medical Instrumentation (AAMI) provided guidance. Its standard, ANSI/AAMI HE75:2009, describes the type of summative evaluation a medical device must undergo. “For example, the study that comes at the end of the development cycle must include at least 15 individuals of each type who will use the device. Otherwise, the FDA will not approve the submission.”

A monster flop

Battelle human-centric design staff members Andrew Sweeney, Annie Diorio-Blum, and Neha Kalra participate in a brainstorming session to generate product concepts.

For those who think a usability study is unnecessary, consider the promising diabetes drug Exubera, an inhaled insulin, and the device that delivered it through the mouth, with no more needles. “The product got all the way through testing and then failed in the field,” says Battelle Program/Project Manager Carol Stillman. “It passed from the product performance standpoint, and it was a safe device. What the developer did not do was [consider] the user-experience side.”

The pharmaceutical company’s plan was that a person just about to eat – seated at a table – would take the drug as soon as they saw the food. But the device was large and conspicuous. Worse, it looked like the user was taking a hit off a bong, an impression some thought too embarrassing. And taking the drug early, say before entering a public space, did not provide the therapeutic effect. Users found the product to be too inconvenient and chose not to use it.

“Insulin pens have been designed to look like ballpoint pens rather than medical devices, so people can use them in a restaurant without drawing attention to themselves,” says Stillman. One solution for the Exubera design, she suggests, might have been to make it look more like something everyone carries in a bag.

Bottom line: After 11 years of development, the device was pulled from the market. The Wall Street Journal reported that the insulin flop cost the company $2.8 billion.

Early user research and formative usability studies

During early user research, human factors engineers go into the field to watch people work with existing products and figure out where the gaps are, such as where today’s medical devices fall short, or how people compensate for a design’s shortcomings. “It’s fascinating to watch people find a way to use a product. What people put in place to fill usability gaps are big indicators of what should be present in the next generation of the device. We watch people work with a device in a natural setting and then interview them, taking lots of notes about the environment, such as the noise, lighting, complexity in the space, and the likelihood of being interrupted, or how mobile the device might be, and how conspicuous or inconspicuous it must be. For example, if a home is the setting, we might ask who else lives there, besides the patient, who might use the device. If the device is used in a surgical space, how will the surgical team use it, and how do team members interact with one another? That’s a huge part of the design. This type of research takes place early in the product design lifecycle. It helps the designers understand the context of use. Hence, it’s called contextual research,” McConnell explains.

After early concept sketching takes place, a group of intended users can be brought into an observation room and asked to react to a picture, a 3D model, or a collection of possible future designs. “Nonfunctional models can include 3D-printed components. During all our usability studies, we create an environment that’s as close as possible to the one where the device will actually be used. Then we put nonfunctional components in the environment and say to a subject, ‘Imagine you want to accomplish a particular task. Walk me through what you would do. Pick things up, move them around, and tell me what you are thinking and how you would use these components.’ It’s astounding what you learn watching someone use an early approximation of a product. This is referred to as formative work,” she says.

McConnell tells of working on a device whose predecessor was already commercially available. The study’s goal was to determine whether a change in physical form would differentiate the new device enough that people would use it as intended, without confusing it with the commercially marketed predecessor. “We were interested in watching people pick up the model and move it around, to determine whether the design alone would be sufficient to indicate its use. For instance, we watched to see if the device was held the right way. You learn a lot just by asking folks to pick up a nonfunctional prototype,” she notes.

A recent FDA requirement

The clumsy insulin dispenser was so embarrassing to users that they became nonusers. The developer conducted no user studies before production.

The FDA must see value in such studies, because it now requires them in submissions. “Now we have a whole other side of requirements. It was hard enough to design a device when companies had to meet functional and biocompatibility requirements,” McConnell says.

“But we hope that over the next decade, people come to understand that the new user interface requirements are not just an extra assignment. When we have early versions of the device in design, we conduct formative studies to see if the user interface requirements are met and if the users will be able to perform the tasks they need to do. When started early, the study makes the rest of the design process much easier because it answers usability questions such as, ‘How will the alarm be perceived?’” says Stillman.

Alarms are a subject of constant debate, she adds. Consider: How can a device issue a warning without sounding alarming? Stillman recalls one device whose alarms went off so often, and were so annoying, that ER nurses kept it turned off. “Its designers hadn’t thought about how distracting the alarm would be in a busy emergency room. It became easier to just turn the darn thing off and check it every once in a while than to have it going off all the time,” she says.

What could possibly go wrong?

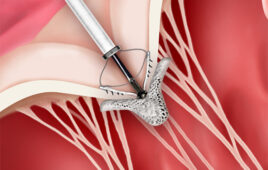

Lest you think conducting usability studies is an exercise in excess, consider this: “I was working on a system that delivers a drug, an implanted system with an implanted pump,” says McConnell. “We were doing an evaluation of that system, close to the summative study, and realized that when the nurse held it to enter the prescription from the physician, the field where the nurse would enter a value for the amount of drug was constructed in such a way that the nurse could misinterpret where the decimal point fell. That meant the nurse could unintentionally program the device to deliver much more drug than intended. Fortunately, the flaw was caught and corrected before the system went to production.”

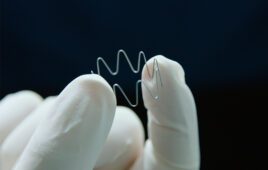

In a final example, a device intended to deliver a medication by injection could be used incorrectly in a way that let people inadvertently poke themselves. “That’s pretty common. Anybody who has a story about an injection device can tell you what happens when you use it wrong, and what part of your body gets the injection,” says Stillman.

Good enough or perfect? When to release a device

“What’s the minimum we need to do to earn FDA approval?” is the wrong question, say McConnell and Stillman. The answer varies, depending on the device. Developers naturally ask it because they work with limited budgets and schedules. “We bring value to the process by knowing what the FDA expects of medical device manufacturers and fitting this into the context of a particular business’s needs,” says McConnell.

To evaluate an initial medical device prototype, a Battelle human-centric design staffer uses it on a mannequin as part of an early formative study.

FDA guidance talks about acceptability. “The people who wrote the guidance understand that at some point, you have to launch the device and make money for the company to stay in business. Users want the device when it’s good for them, meaning reasonably usable and always safe for use. They don’t want to wait until it’s perfect. The idea of ‘good enough’ to be safe and reasonably useful is universal. A medical device manufacturer wants to make it good enough; the users want it to be good enough; the FDA expects it to be good enough. All these parties know that there will be an evolution in the product design. ‘Good enough’ is kind of the ‘secret sauce’ to bringing a product to market that is safe and useful, but not perfect,” says McConnell.

So the right question is, “What is acceptable?” McConnell adds that her team and others can keep refining a design for as long as time allows, but at some point there has to be a trade-off: the device is good enough to be safe and effective, and that’s what it needs to be.