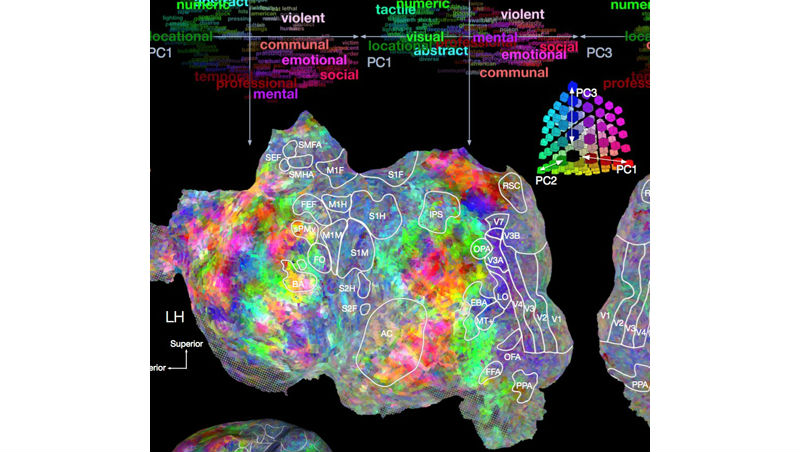

With the help of Big Data methods, this research aims to systematically map the brain's semantic system to produce a detailed semantic atlas of the human brain.

Here in the functional MRI room at the University of California, Berkeley, it's story time. Getting a brain scan for this project isn't a bad gig: just kick back, listen to some stories and watch some videos. But for the scientists conducting this research, it's far from a midday break.

With support from the National Science Foundation (NSF), neuroscientist Jack Gallant and his team are discovering how language-related information is represented and processed in the human brain. Using functional MRI, they measure changes in blood flow throughout the brain about once every second while people listen to natural narrative stories. The researchers then use Big Data methods to construct mathematical models of language processing and create detailed maps that show how different aspects of language are represented in different locations in the brain.

In previous work, they showed that they could use models of visual processing to decode the objects and actions in movies solely from brain activity, and the new language models might also prove useful for brain decoding. Gallant says the practical applications could one day include new therapies to help stroke patients recover language skills, designs for faster computers and even brain-machine interfaces that would allow communication without speech. Findings from this research have been published in the journal Nature.

What if a map of the brain could help us decode people's inner thoughts? Scientists at the University of California, Berkeley, have taken a step in that direction by building a "semantic atlas" that shows in vivid colors and multiple dimensions how the human brain organizes language. The atlas identifies brain areas that respond to words with similar meanings. (Credit: Alex Huth, UC Berkeley)

The research in this episode was supported by NSF award #1208203, "Cortical representation of phonetic, syntactic and semantic information during speech perception and language comprehension." The award was funded through NSF's Collaborative Research in Computational Neuroscience (CRCNS) program.