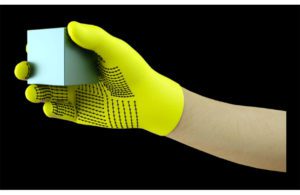

An inexpensive sensor-embedded glove may help in robotics and prosthetics design. (Image from Massachusetts Institute of Technology)

Researchers at the Massachusetts Institute of Technology have developed a low-cost, sensor-packed glove that, combined with a massive dataset, enables an AI system to recognize objects through touch alone.

The glove captures pressure signals as humans interact with objects. It then generates high-resolution tactile datasets that robots can use to better identify, weigh, and manipulate objects. The researchers believe the glove and AI system may aid in prosthetics design.

The knitted “scalable tactile glove” (STAG) has about 550 tiny sensors embedded across nearly the entire hand. Each sensor captures pressure signals as humans interact with objects in various ways. A neural network processes the signals to “learn” a dataset of pressure-signal patterns related to specific objects. Then, the system uses that dataset to classify the objects and predict their weights by feel alone, with no visual input needed, according to the university.
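The idea of learning pressure-signal patterns and then classifying by feel can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it swaps the researchers' neural network for a much simpler nearest-centroid classifier, and the pressure templates, noise level, and function names (`make_frame`, `centroid`, `classify`) are hypothetical, not taken from the STAG work. Only the sensor count of roughly 550 comes from the article.

```python
import random

NUM_SENSORS = 550  # approximate sensor count reported for STAG


def make_frame(template, noise, rng):
    """Simulate one pressure frame: a template plus Gaussian noise, clipped at zero."""
    return [max(0.0, p + rng.gauss(0.0, noise)) for p in template]


def centroid(frames):
    """Average a list of equal-length frames, sensor by sensor."""
    n = len(frames)
    return [sum(column) / n for column in zip(*frames)]


def classify(frame, centroids):
    """Return the label whose centroid is nearest in squared Euclidean distance."""
    def distance(label):
        return sum((a - b) ** 2 for a, b in zip(frame, centroids[label]))
    return min(centroids, key=distance)


rng = random.Random(0)

# Hypothetical per-object pressure templates (synthetic, not real glove data).
templates = {
    "mug": [rng.random() for _ in range(NUM_SENSORS)],
    "pen": [rng.random() for _ in range(NUM_SENSORS)],
    "tennis ball": [rng.random() for _ in range(NUM_SENSORS)],
}

# "Training": average 20 noisy frames per object into one centroid.
centroids = {
    label: centroid([make_frame(t, 0.1, rng) for _ in range(20)])
    for label, t in templates.items()
}

# "Inference": classify a new noisy frame by touch pattern alone.
test_frame = make_frame(templates["pen"], 0.1, rng)
predicted = classify(test_frame, centroids)
print(predicted)
```

Real grasps are far less separable than these synthetic templates, which is one reason a learned model such as a neural network is a better fit than averaging frames.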

In a paper recently published in Nature, the researchers describe a dataset they compiled using STAG for 26 common objects, including a soda can, scissors, tennis ball, spoon, pen, and mug. Using the dataset, the system predicted the objects’ identities with up to 76% accuracy. The system can also predict the correct weights of most objects within about 60 grams, the research showed.
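For readers unfamiliar with how figures like these are typically computed, here is a minimal sketch. It assumes that accuracy means the share of correct labels and that "within about 60 grams" means the share of weight predictions whose absolute error is at most a tolerance. The function names and all numbers below are invented for illustration and are not the paper's data or its exact evaluation procedure.

```python
def accuracy(true_labels, predicted_labels):
    """Fraction of predictions that match the true label."""
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


def fraction_within(true_weights, predicted_weights, tolerance_g):
    """Fraction of weight predictions whose absolute error is at most tolerance_g grams."""
    hits = sum(
        abs(t - p) <= tolerance_g
        for t, p in zip(true_weights, predicted_weights)
    )
    return hits / len(true_weights)


# Invented example values, not results from the study.
true_labels = ["mug", "pen", "spoon", "scissors"]
predicted_labels = ["mug", "pen", "mug", "scissors"]
true_weights_g = [350.0, 10.0, 40.0, 90.0]
predicted_weights_g = [320.0, 45.0, 130.0, 100.0]

acc = accuracy(true_labels, predicted_labels)
frac = fraction_within(true_weights_g, predicted_weights_g, 60.0)
print(acc, frac)
```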

Similar sensor-based gloves used today cost thousands of dollars and often contain only around 50 sensors, so they capture less information. Even though STAG produces very high-resolution data, it’s made from commercially available materials totaling around $10. The tactile sensing system could be used in combination with traditional computer vision and image-based datasets to give robots a more human-like understanding of interacting with objects, according to the researchers.

“Humans can identify and handle objects well because we have tactile feedback. As we touch objects, we feel around and realize what they are. Robots don’t have that rich feedback,” said researcher Subramanian Sundaram in a news release. “We’ve always wanted robots to do what humans can do, like doing the dishes or other chores. If you want robots to do these things, they must be able to manipulate objects really well.”

The system could be combined with the torque and force sensors already on robot joints to help robots better predict object weight, according to Sundaram.

“Joints are important for predicting weight, but there are also important components of weight from fingertips and the palm that we capture,” he said.