Source: Grace Jean, Office of Naval Research

Medical researchers are demonstrating that Office of Naval Research (ONR)-funded software developed for finding and recognizing undersea mines can help doctors identify and classify cancer-related cells. “The results are spectacular,” said Dr. Larry Carin, professor at Duke University and developer of the technology. “This could be a game-changer for medical research.”

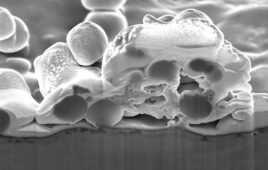

The problem that physicians encounter in analyzing images of human cells is surprisingly similar to the Navy’s challenge of finding undersea mines. When examining tissue samples, doctors must sift through hundreds of microscopic images containing millions of cells. To pinpoint specific cells of interest, they use an automated image analysis software toolkit called FARSIGHT, or Fluorescence Association Rules for Quantitative Insight. It identifies cells based on a subset of examples initially labeled by a physician. However, the resulting classifications can be erroneous because the computer applies tags based on that small sample.

Adding ONR’s active learning algorithms makes the identification of cells more accurate and consistent, researchers said. The enhanced toolkit also requires physicians to label fewer cell samples because the algorithm automatically selects the best set of examples to teach the software. A medical team at the University of Pennsylvania is applying the ONR algorithms, embedded into FARSIGHT, to examine tumors from kidney cancer patients. The research focuses on endothelial cells, which form the blood vessels that supply the tumors with oxygen and nutrients, and could one day improve drug treatments for different types of kidney cancer, also known as renal cell carcinoma.

It usually takes days, even weeks, for a pathologist to manually pick out all the endothelial cells in 100 images. The enhanced FARSIGHT toolkit can accomplish the same feat in a few hours with accuracy comparable to a human’s.

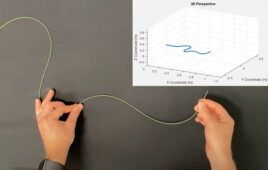

ONR’s active learning software was originally developed to allow robotic mine-hunting systems to behave more like humans when they are uncertain about how to classify an object. Using information theory, the software identifies the items it is least certain about and asks a human to provide labels for them. This feature is valuable in mine warfare, where identifying unknown objects beneath the ocean has traditionally been accomplished by sending in divers.
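The idea of asking a human only about uncertain items can be illustrated with a small sketch. The article does not say which information-theoretic criterion the ONR software uses, so this example assumes one common choice, Shannon entropy of the classifier's predicted probabilities. The function names and the sample probabilities are hypothetical and are not taken from FARSIGHT or the ONR code.

```python
import math

def entropy(probs):
    """Shannon entropy (in bits) of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

def select_queries(predictions, budget):
    """Return indices of the `budget` predictions with the highest entropy.

    High entropy means the classifier is unsure, so these are the items
    a human expert would be asked to label.
    """
    ranked = sorted(
        range(len(predictions)),
        key=lambda i: entropy(predictions[i]),
        reverse=True,
    )
    return ranked[:budget]

# Hypothetical classifier outputs for five objects: [P(class A), P(class B)].
# In the article's settings the classes might be "mine" vs. "not mine",
# or "endothelial cell" vs. "other cell".
predictions = [
    [0.98, 0.02],  # confident
    [0.55, 0.45],  # uncertain
    [0.10, 0.90],  # fairly confident
    [0.50, 0.50],  # maximally uncertain
    [0.80, 0.20],  # somewhat confident
]

# Ask the human to label only the two most uncertain items.
to_label = select_queries(predictions, budget=2)
print(to_label)
```

In this sketch the human labels only the items the classifier is least sure about, which is consistent with the article's point that physicians need to label fewer samples.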