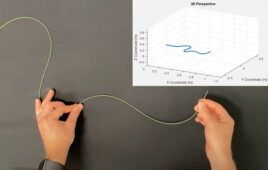

PhD student Yao Li demonstrates the technology, which uses a brain-computer interface to send signals to a robot. [Photo courtesy of the University of Illinois]

Industrial and enterprise systems engineering professor Thenkurussi (Kesh) Kesavadas and PhD student Yao Li set up a camera to photograph objects on a conveyor belt and displayed those pictures on a computer screen in front of a person wearing a helmet fitted with sensors. The person looks at each picture to decide whether the object is defective. If it is, the person's brain emits a signal at a particular frequency, which tells the robot to remove the object from the conveyor belt.

The technique, Steady-State Visually Evoked Potentials (SSVEP), relies on signals the brain naturally produces at certain frequencies. When a visual stimulus excites the eyes at a frequency between 3.5 Hz and 75 Hz, the brain produces electrical signals at the same frequency. In a nutshell, the brain mimics the frequency of the visual stimulus.
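To make the frequency-matching idea concrete, here is a minimal sketch of how software might decide which stimulus frequency dominates a recorded brain signal. It is not the Illinois team's method. It assumes a single EEG channel, a hypothetical 250 Hz sampling rate and hypothetical stimulus frequencies of 8, 12 and 15 Hz, and it simply measures the signal's power at each candidate frequency with a single-bin discrete Fourier transform and picks the largest. Practical SSVEP systems often use multichannel methods such as canonical correlation analysis instead.

```python
import math
import random
from typing import Sequence


def power_at(signal: Sequence[float], freq_hz: float, fs_hz: float) -> float:
    """Power of `signal` at one frequency, computed as a single-bin DFT.

    The samples are correlated with a cosine and a sine at `freq_hz`;
    the squared magnitude of that correlation, divided by the sample
    count, is the spectral power at that frequency.
    """
    re = 0.0
    im = 0.0
    for n, x in enumerate(signal):
        phase = 2.0 * math.pi * freq_hz * n / fs_hz
        re += x * math.cos(phase)
        im -= x * math.sin(phase)
    return (re * re + im * im) / len(signal)


def detect_ssvep(signal: Sequence[float],
                 candidate_freqs_hz: Sequence[float],
                 fs_hz: float) -> float:
    """Return the candidate stimulus frequency with the most power in the signal."""
    return max(candidate_freqs_hz, key=lambda f: power_at(signal, f, fs_hz))


if __name__ == "__main__":
    fs = 250.0                       # hypothetical EEG sampling rate (Hz)
    candidates = [8.0, 12.0, 15.0]   # hypothetical on-screen stimulus frequencies (Hz)
    rng = random.Random(0)
    # Simulated 2 s recording: a 12 Hz SSVEP response buried in Gaussian noise.
    eeg = [math.sin(2 * math.pi * 12.0 * n / fs) + rng.gauss(0.0, 0.5)
           for n in range(int(2 * fs))]
    print(detect_ssvep(eeg, candidates, fs))  # → 12.0
```

Because the brain's response follows the stimulus frequency, the frequency with the strongest power tells the system which stimulus the viewer was attending to; in a setup like the one described, that result could then be mapped to a robot command.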

“The signals from the brain are very similar for everybody, and we know which part of the brain gives certain signals. Implementing that in the real world is tougher in that through BCI, you have to pick up the signal precisely,” said Kesavadas.

This kind of brain-computer interface (BCI) control could help in high-volume manufacturing, where robots can be trained to recognize defects on the conveyor belt and remove them on their own.

“The robot is actually monitoring your thinking process,” Kesavadas said. “If the robot realizes you saw something bad, it should go take care of it. That is the fundamental idea in manufacturing we are trying to explore.”

Conventional robot programming requires technicians with extensive expertise in the robots.

“Currently programming robots takes a significant amount of time and expertise and technicians who are fully trained to use them. In high volume manufacturing, the time for programming the robot is well spent. However, if you go into an unstructured environment, not just in manufacturing but even in agriculture or medicine, where the environment keeps changing, you don’t get nearly the return on your investment,” said Kesavadas.

Kesavadas is also working on mind-controlled robots that bring objects to people with paraplegia. That application, however, requires a surgeon to place a sensor in the brain.

“As we devise an external system to become much more consistent and reliable, it will benefit many people,” Kesavadas said. “Surgically placing the sensors is a more expensive, invasive and risky process.”

This research comes after University of Minnesota biomedical engineering professor and researcher Bin He and his team announced that they developed a non-invasive method to control robotic arms using only thoughts.

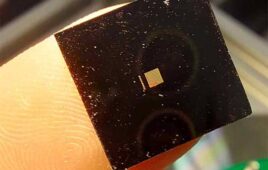

He and his team also used a BCI, combined with machine learning, to process operators' thoughts. Their technique, an electroencephalography (EEG)-based BCI, recorded weak electrical activity in the brain through an EEG cap with 64 electrodes. Operators only had to think about moving the robotic arm in 3D space, and the arm responded with an 80% success rate. Moving an object from a table to a shelf had a 70% success rate.

“Just by imagining moving their arms, they were able to move the robotic arm,” said He.

Kesavadas wants his research to benefit manufacturing, and he presented it to the National Science Foundation in December.

“Until now, there has been no research in using brain-computer interfacing for manufacturing,” Kesavadas said.

“Our goal at the onset was to prove these technologies can actually work and that the robots can be used in a more friendly way in manufacturing. We have done that. The next stage is to coordinate with industries that would need this kind of technology and do a demonstration in a real-life environment. We want industry to know the potential of this technology, ignite the thinking process and how they can use the role of brain-computer interface as a whole to bring a more competitive edge to the industry,” said Kesavadas.
